Building Autonomous Software Teams with Agentic AI

Most engineering leaders don’t wake up thinking, “I want an autonomous software team.”

What they usually want is simpler—and harder at the same time: fewer handoffs, fewer late-night incidents, and a development process that doesn’t fall apart the moment things get busy.

That’s where Agentic AI software development quietly enters the picture.

Not as a replacement for engineers. Not as a magic productivity hack. But as a way to shift responsibility for execution-heavy decisions from humans to systems, while humans stay focused on direction, trade-offs, and outcomes.

This guide is written for teams who are past the hype stage and are asking a more practical question:

How do we actually build autonomous software teams using Agentic AI—and not regret it later?

TL;DR (For Skimmers with Production on Fire)

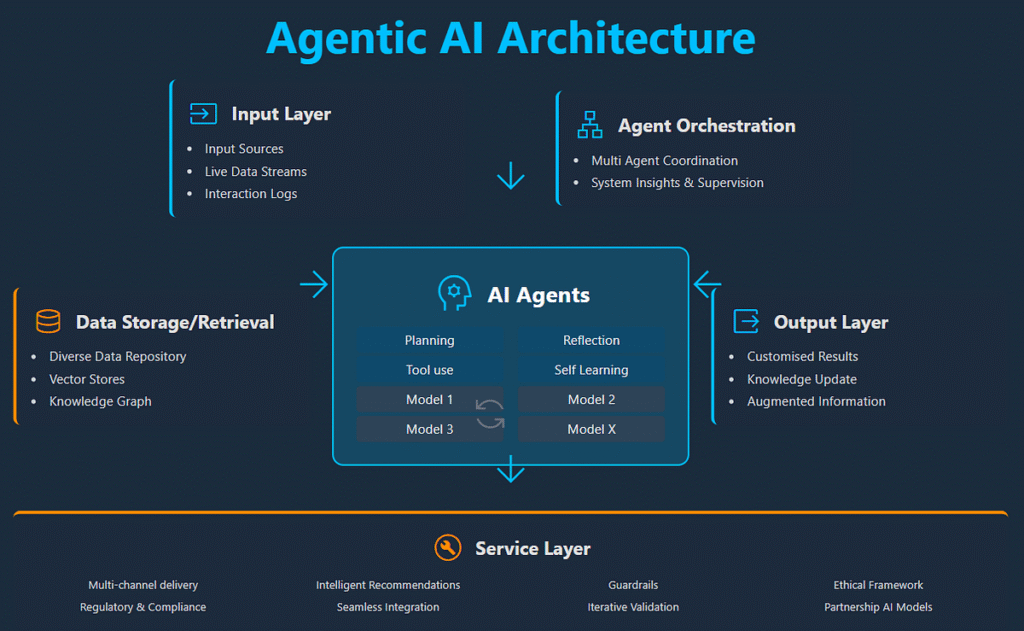

- Agentic AI enables autonomous software teams by introducing goal-driven AI agents into software development lifecycle (SDLC) workflows

- These agents act as virtual developers, testers, and DevOps engineers

- The real value isn’t code generation—it’s coordination, decision-making, and self-correction

- Multi-agent collaboration is what makes autonomy scalable

- Governance and human-in-the-loop controls are non-negotiable

- Adoption works best as a maturity journey, not a big-bang rollout

From Tools to Teammates: Why Agentic AI Changes the Team Model

Traditional AI tools sit on the sidelines. You call them when you need something.

Agentic AI doesn’t wait to be called.

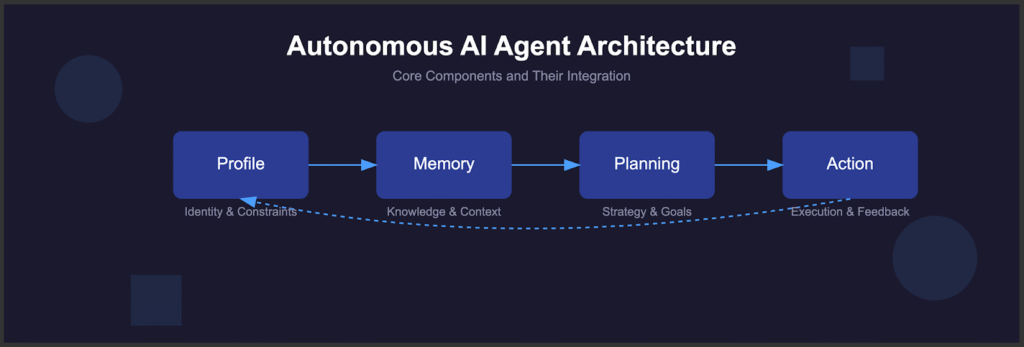

In an autonomous setup, AI agents:

- Observe system state

- Decide what matters

- Act without explicit prompts

- Learn from outcomes
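
To make that loop concrete, here is a minimal sketch in Python. It is not taken from any particular agent framework: `Agent`, `step`, and the toy error-rate rule are all hypothetical names chosen for illustration, and "learning" is reduced to recording outcomes that a real system would feed back into its decisions.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Agent:
    """Hypothetical agent wrapper; every name here is illustrative."""
    observe: Callable[[], dict]              # read current system state
    decide: Callable[[dict], Optional[str]]  # pick an action, or None to do nothing
    act: Callable[[str], bool]               # run the action and report success
    history: list = field(default_factory=list)  # (action, succeeded) pairs

    def step(self) -> Optional[str]:
        """Run one observe -> decide -> act cycle and record the outcome."""
        state = self.observe()
        action = self.decide(state)
        if action is None:
            return None
        succeeded = self.act(action)
        # Only recorded here; a real agent would use this to adjust decisions.
        self.history.append((action, succeeded))
        return action


# Toy wiring (assumed values): roll back when the error rate exceeds 5%.
agent = Agent(
    observe=lambda: {"error_rate": 0.12},
    decide=lambda state: "rollback" if state["error_rate"] > 0.05 else None,
    act=lambda action: True,
)
agent.step()
```

Nothing in `step` waits for a prompt. In practice it would be called on a schedule or on events, which is the part that separates an agent from a tool.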

That’s a fundamental shift. You’re no longer “using AI.” You’re working alongside it.

This is why autonomous software teams feel less like automation projects and more like organizational change initiatives.

AI Agents as Virtual Team Members (Not Just Utilities)

Virtual Developers

These agents don’t just generate code. They:

- Understand repository context

- Refactor when complexity spikes

- Enforce architectural constraints

- Coordinate changes across services

Think of them as junior engineers with near-perfect recall of the codebase and no ego, but also no intuition unless you teach it.

Virtual Testers

AI agents in testing:

- Generate tests from system behavior, not specs

- Detect flaky tests early

- Trace failures back to likely causes

- Adjust coverage dynamically
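
As one small example, flaky-test detection can start from a simple rule: a test that both passes and fails on the same commit is a suspect. The sketch below assumes test runs arrive as `(test_name, commit_sha, passed)` tuples. That shape and the name `find_flaky_tests` are assumptions for illustration, not the format of any specific CI system.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def find_flaky_tests(runs: Iterable[Tuple[str, str, bool]]) -> List[str]:
    """Return tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Two distinct outcomes (True and False) for identical code means flakiness.
    flaky = {name for (name, _sha), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Made-up run history for illustration.
runs = [
    ("test_checkout", "abc123", True),
    ("test_checkout", "abc123", False),  # same commit, different result
    ("test_login", "abc123", True),
    ("test_login", "def456", False),     # different commit: a real change, not flaky
]
flaky = find_flaky_tests(runs)
```

A failure on a new commit is treated as a genuine regression here, which is why `test_login` is not flagged.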

Testing stops being something you “finish” and becomes something that’s always happening.

Virtual DevOps Engineers

This is where AI-driven DevOps starts to feel real.

Agents:

- Decide when to deploy

- Predict failure risk

- Trigger rollbacks automatically

- Optimize infrastructure usage

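Pipelines stop following fixed rules alone. They follow intent: a stated goal, such as staying within an error budget, that agents evaluate for each release.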

Multi-Agent Collaboration: Where Autonomy Actually Scales

One agent alone is useful. Multiple agents working together is where things get interesting—and dangerous if poorly designed.

Common Collaboration Models

| Model | How It Works | When It Fits |
| --- | --- | --- |
| Central Orchestrator | One agent coordinates others | Early-stage adoption |
| Peer-to-Peer | Agents negotiate tasks | Complex systems |
| Hierarchical | Senior agents guide specialists | Enterprise scale |

In real projects, teams often mix these patterns.

The key isn’t the structure. It’s shared context and clear authority boundaries.
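
Here is a rough sketch of the central orchestrator model with both ingredients in place. `Specialist`, `Orchestrator`, and the task names are hypothetical. The shared context is a plain dictionary, and the authority boundary is a per-agent set of allowed tasks.

```python
from dataclasses import dataclass


@dataclass
class Specialist:
    name: str
    allowed_tasks: set  # authority boundary: what this agent may do

    def handle(self, task: str, context: dict) -> str:
        # Every agent writes to the same context, so others can see what happened.
        context.setdefault("log", []).append(f"{self.name} handled {task}")
        return f"{self.name}:{task}"


class Orchestrator:
    """Routes each task to the first specialist authorized to handle it."""

    def __init__(self, specialists):
        self.specialists = list(specialists)
        self.context = {}  # shared context visible to all agents

    def dispatch(self, task: str) -> str:
        for specialist in self.specialists:
            if task in specialist.allowed_tasks:
                return specialist.handle(task, self.context)
        # No agent owns this task: fail loudly instead of guessing.
        raise PermissionError(f"no agent is authorized for task {task!r}")


orchestrator = Orchestrator([
    Specialist("dev", {"refactor", "write_tests"}),
    Specialist("ops", {"deploy", "rollback"}),
])
result = orchestrator.dispatch("deploy")
```

The same two ideas carry over to peer-to-peer and hierarchical setups. Only the routing logic changes.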

Agentic AI Across the Development Lifecycle (Practically Speaking)

Code Generation & Refactoring (The Obvious Part)

Yes, AI agents can generate code. That’s table stakes now.

The more interesting part is refactoring:

- Agents monitor complexity metrics

- Detect architectural drift

- Propose or apply refactors before things break
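
As a sketch of the monitoring step, the snippet below uses Python's standard `ast` module to count branch points per function and flag the ones above a threshold. The count is a rough stand-in for cyclomatic complexity, not a faithful implementation of it. The threshold and the function names are assumptions for illustration.

```python
import ast
from typing import Dict, List

# Node types treated as branch points in this approximation.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.BoolOp, ast.IfExp)


def branch_counts(source: str) -> Dict[str, int]:
    """Map each function name to 1 + the number of branch nodes inside it."""
    counts = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            branches = sum(isinstance(child, BRANCH_NODES) for child in ast.walk(node))
            counts[node.name] = 1 + branches
    return counts


def refactor_candidates(source: str, threshold: int = 10) -> List[str]:
    """Return names of functions whose score exceeds the threshold."""
    return sorted(name for name, score in branch_counts(source).items() if score > threshold)


SAMPLE = '''
def simple(x):
    return x + 1

def branchy(items):
    total = 0
    for item in items:
        if item > 10:
            total += 2
        elif item > 5:
            total += 1
    return total
'''
scores = branch_counts(SAMPLE)
candidates = refactor_candidates(SAMPLE, threshold=3)
```

An agent would run something like this on each change and track the trend over time. A rising score is a better refactoring signal than any single value.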

That’s how technical debt gets managed instead of ignored.

Automated Testing & Bug Resolution

In autonomous setups:

- Agents generate tests after code changes

- Failures are analyzed, not just reported

- Fixes are proposed—or sometimes applied automatically

Humans step in when judgment is needed. Not for every red build.

Continuous Deployment & Rollback Decisions

This is where trust gets tested.

Agentic systems:

- Assess deployment risk in real time

- Delay releases when signals look wrong

- Roll back before users notice
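
A stripped-down version of that decision might look like the sketch below. The signals, thresholds, and the `decide` function are illustrative assumptions. Real systems weigh far more inputs, and the code assumes the latency baseline is a positive number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signals:
    error_rate: float           # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float       # current 95th percentile latency
    baseline_latency_ms: float  # latency before the release (assumed > 0)


def decide(signals: Signals, phase: str,
           max_error_rate: float = 0.01, max_latency_ratio: float = 1.5) -> str:
    """Return 'proceed', 'delay', or 'rollback' for a release.

    phase is 'pre-release' (nothing shipped yet) or 'post-release'.
    """
    latency_ratio = signals.p95_latency_ms / signals.baseline_latency_ms
    healthy = (signals.error_rate <= max_error_rate
               and latency_ratio <= max_latency_ratio)
    if healthy:
        return "proceed"
    # Unhealthy signals: hold the release if it has not shipped, undo it if it has.
    return "delay" if phase == "pre-release" else "rollback"


healthy_case = decide(Signals(0.002, 210.0, 200.0), "pre-release")
delayed_case = decide(Signals(0.050, 210.0, 200.0), "pre-release")
rollback_case = decide(Signals(0.002, 400.0, 200.0), "post-release")
```

Keeping the thresholds as explicit parameters matters. They are the part humans should own and review.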

It’s uncomfortable at first. Then it becomes hard to live without.

Performance Monitoring & Self-Healing Systems

In production, agents:

- Correlate logs, metrics, and traces

- Detect anomalies early

- Trigger remediation workflows

- Learn from every incident
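
The detect-then-remediate step can be sketched with nothing more than the standard library. This example uses a plain z-score against recent history, which is one simple technique among many and is not claimed to be what any given platform does. The `RUNBOOKS` mapping and workflow names are hypothetical.

```python
from statistics import mean, stdev
from typing import Optional, Sequence

# Hypothetical mapping from metric name to a remediation workflow.
RUNBOOKS = {"p95_latency_ms": "restart_service", "queue_depth": "scale_out_workers"}


def is_anomalous(history: Sequence[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag a value more than z_threshold standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]  # flat history: any change is unusual
    return abs(latest - mean(history)) / spread > z_threshold


def pick_remediation(metric: str, history: Sequence[float], latest: float) -> Optional[str]:
    """Return a workflow name for an anomaly, or None if the value looks normal."""
    if not is_anomalous(history, latest):
        return None
    # Unknown metrics fall back to a human instead of an automated fix.
    return RUNBOOKS.get(metric, "page_on_call")


latency_history = [100.0, 102.0, 98.0, 101.0, 99.0]
spike = pick_remediation("p95_latency_ms", latency_history, 150.0)
normal = pick_remediation("p95_latency_ms", latency_history, 100.5)
unknown = pick_remediation("disk_io_wait", latency_history, 150.0)
```

The fallback to `page_on_call` is the design choice worth copying: when the system does not recognize a situation, it hands off to a person.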

The goal isn’t zero incidents. It’s less chaos when they happen.

The Tooling Ecosystem (Framework-Level View)

This isn’t about specific vendors.

Most Agentic AI software development stacks include:

- Agent orchestration frameworks

- Shared memory/context stores

- Observability layers

- Policy and guardrail engines

- Integration layers for CI/CD and infrastructure

If your tooling can’t explain why an agent acted, it’s not ready for autonomy.

Risks, Limitations, and Hard Truths

Let’s be honest. Agentic AI can fail loudly, and it can also fail quietly.

Real Risks Teams Run Into

- Over-automation without visibility

- Conflicting agent decisions

- Silent performance regressions

- Security blind spots

These aren’t theoretical. They happen.

Governance & Human-in-the-Loop Strategies

Successful teams:

- Define clear autonomy boundaries

- Require human approval at key decision points

- Log every agent decision

- Review agent behavior regularly
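
The first three practices can be combined into one small gate, sketched below. `ApprovalGate`, the action names, and the log fields are hypothetical. The point is the shape: every request passes through one place that enforces boundaries, checks for approval, and writes a log entry no matter the outcome.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ApprovalGate:
    autonomous_actions: set  # agents may do these alone
    approval_actions: set    # these need a named human approver
    audit_log: list = field(default_factory=list)

    def request(self, agent: str, action: str, approved_by: Optional[str] = None) -> str:
        """Return 'allowed', 'needs_approval', or 'denied', and log the decision."""
        if action in self.autonomous_actions:
            outcome = "allowed"
        elif action in self.approval_actions:
            outcome = "allowed" if approved_by else "needs_approval"
        else:
            outcome = "denied"  # anything not listed is outside the boundary
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "action": action,
            "approved_by": approved_by,
            "outcome": outcome,
        })
        return outcome


gate = ApprovalGate(
    autonomous_actions={"restart_service"},
    approval_actions={"deploy_to_production"},
)
first = gate.request("ops-agent", "restart_service")
second = gate.request("ops-agent", "deploy_to_production")
third = gate.request("ops-agent", "deploy_to_production", approved_by="alice")
fourth = gate.request("ops-agent", "delete_database")
```

Denied and pending requests are logged too. Reviewing what agents tried to do is often more revealing than reviewing what they did.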

Autonomy without governance isn’t innovation. It’s negligence.

A Practical Maturity Roadmap for Adoption

This is where most advice gets unrealistic. Here’s what actually works.

Stage 1: Assisted Autonomy

- Agents suggest actions

- Humans approve everything

- Focus on learning, not speed

Stage 2: Bounded Autonomy

- Agents act in low-risk areas

- Clear rollback mechanisms exist

- Humans monitor outcomes

Stage 3: Conditional Autonomy

- Agents act independently within policies

- Humans intervene on exceptions

- KPIs guide expansion

Stage 4: Scaled Autonomy

- Multi-agent coordination

- Self-healing workflows

- Humans focus on strategy, not execution

Skipping stages usually backfires.
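
One way to keep a team honest about which stage it is in is to encode the stage as configuration and let it gate what agents may do unattended. The sketch below is an illustrative reading of the four stages. The `risk` labels and the `within_policy` flag are assumptions, and a real setup would derive them from its own policy engine.

```python
from enum import IntEnum


class Stage(IntEnum):
    ASSISTED = 1     # agents suggest, humans approve everything
    BOUNDED = 2      # agents act in low-risk areas
    CONDITIONAL = 3  # agents act independently within policies
    SCALED = 4       # multi-agent coordination, humans focus on strategy


def may_act_without_approval(stage: Stage, risk: str, within_policy: bool = True) -> bool:
    """Decide whether an agent may act unattended at the given maturity stage.

    risk is an assumed label such as 'low', 'medium', or 'high'.
    """
    if stage == Stage.ASSISTED:
        return False
    if stage == Stage.BOUNDED:
        return risk == "low"
    # Stages 3 and 4: policy compliance is the deciding factor.
    return within_policy


assisted = may_act_without_approval(Stage.ASSISTED, "low")
bounded_low = may_act_without_approval(Stage.BOUNDED, "low")
bounded_high = may_act_without_approval(Stage.BOUNDED, "high")
conditional = may_act_without_approval(Stage.CONDITIONAL, "high")
out_of_policy = may_act_without_approval(Stage.SCALED, "low", within_policy=False)
```

Moving up a stage then becomes a reviewed configuration change rather than something that happens by drift.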

How This Fits with the Bigger Picture

This article builds on the conceptual foundation covered in “Agentic AI Systems in SDLC: Autonomous Decision-Making Across Modern Software Engineering.”

And if you’re looking for hands-on guidance, the implementation framework lives in “How to Use Agentic AI in Software Development Lifecycle.”

Both are worth reading before making architectural decisions you’ll live with for years.

Why This Becomes a Competitive Advantage

Autonomous software teams don’t just ship faster. They recover faster, learn faster, and scale without burning people out.

That’s hard to copy.

Agentic AI isn’t about doing more work. It’s about letting systems handle decision density so humans can focus on what still requires judgment.

Teams that get this right won’t just deliver software better.

They’ll operate differently.

And over time, that difference compounds.